Anyone today can go to one of the available AI assistants or chatbots and use natural language to try to get an Infrastructure-as-Code (IaC) solution that fits their requirements. Anyone who has already tried this knows that you are likely to get an invalid solution, or one that contains one or more "invented" pieces of information: things that don't really exist and are totally made up (even though they might seem authentic at first blush!).

🌞 If a search engine sent you to this blog post because you entered keywords related to working out or personal training, you will likely be disappointed to learn that this article has nothing to do with either...

ARM, or Azure Resource Manager, is the deployment and management model used in Microsoft's cloud, Microsoft Azure. Bicep is a domain-specific language (DSL) for declaratively defining the cloud resources you want to build in Azure; Bicep files compile down to ARM JSON templates. It just happens that when both names are used in the same sentence, things get a bit... punny.

The following 5 tips are based on lessons we've learned over time. After them comes a more detailed account of how we discovered these tips and used them to overcome the challenges we encountered while getting the solutions we needed.

- Fast does not necessarily mean good, valid or correct: General-purpose models can be good (and fast) in many scenarios, but they usually suck in scenarios that require specialized knowledge not adequately represented in their training data. That speed sometimes comes at the cost of invalid, made-up results. A great example of the risk of optimizing for fast while ignoring valid is a news story from early 2024 about an IaC vendor that used its AI assistant to generate thousands of solutions and published them online, only to find out (after Google had already indexed them) that a large percentage of them were hallucinations.

- Not all problems can be solved with good Prompt Engineering: Prompt Engineering is great, and well-crafted prompts (system prompts, user prompts or monologues) can be the difference between a really good result and one that seems good but is really, really bad. But no prompt can supply domain knowledge the model simply doesn't have. Where Prompt Engineering usually makes a difference is in well-designed system prompts that make the end user's life easier, so there is no need to spend much energy coming up with the perfect question.

- The cost of well-tuned vs. general-purpose: Fine-tuning is not free. And other ways of grounding general-purpose models, using techniques like RAG, still require adequate data. This is good and important (and well worth it in terms of ROI) if you are doing it for your own domain. But it becomes additional overhead when you have to do it for things that fall outside your core domain. For example, if your domain is healthcare, it does not make sense to spend energy fine-tuning for Azure DevOps or Azure IaC.

- Service-level agreements (SLAs) are critical: What good is a solution that gives you results if it cannot be backed by an SLA? How can you trust it? We've noticed that this key area is often neglected or completely ignored.

- Domain-specific problems DO require a domain-specific solution: This was our key realization after spending so much time working on different AI-based solutions. We will elaborate on that in a bit.

- Bonus Tip: Data scientists are vital, especially for non-trivial projects. With the current proliferation of GenAI and LLMs, some have tried to convince themselves that data scientists are no longer important, in order to save on their AI budgets. Wrong move! Some have even started claiming that all you need is great Prompt Engineering experts. Actually, the opposite is true. Data scientists are still needed, perhaps more now than ever before. DON'T embark on a serious AI project without a data science team, and make sure you actually engage them. It's the right thing to do.

Natural Language & Native Azure IaC (Bicep, ARM)

When using natural language to compose native Azure IaC, i.e., Azure Resource Manager (ARM) and Bicep templates, you'll notice that as the questions get more complicated, the likelihood of getting answers based on partial or full hallucinations gets a lot (a lot!) higher. Some folks rely on their Prompt Engineering skills to compose a super-duper sophisticated question in order to "guide" the AI agent into producing the solution they so desperately desire. That seems a little backwards, doesn't it? AI's promise (and one of its key premises) is to make things easier, not harder.

In olden times, one had to learn a programming language in order to get a problem solved; people wrote computer programs. Then those programs were made reusable so nobody had to reinvent the wheel each time. Programs became domain-specific because they dealt with a specific domain or set of problems. Today, you use natural interactions (keyboard and mouse input, gestures, voice commands, etc.) with domain-specific computer programs and get the solutions you need within the particular domain each program specializes in. You don't have to learn a new programming language or a new set of rules to interact with those programs, do you? So why, then, should you have to learn a new way of crafting questions, the so-called Prompt Engineering, in order to solve your problem?

This was one of the issues we noticed early on, when we started using generative AI and transformer-based models, namely Large Language Models (LLMs), to try to solve some of our hardest problems. We were trying to build really large and sophisticated ARM templates, along with the corresponding UI definitions (createUiDefinition.json) needed for building Azure Applications: the sort of things you find and use in the Azure Portal when you create a resource (first-party Azure Applications, from Microsoft) or install an Azure Marketplace solution (third-party Azure Applications). We tried everything from fine-tuning (the ROI was quite low relative to the cost) to RAG (Retrieval-Augmented Generation), and tinkered with different ways to design our architecture.

That's when we realized that what we needed was a domain-specific AI agent grounded in the knowledge of our specific problem space: the domain of Azure native IaC (Bicep and ARM). Nothing new there; that's to be expected. But we also realized that we needed to create guardrails so that the solutions were not only domain-specific but also spot on and valid. And of course, it would be lovely if that AI agent could be super-fast and not cost us an arm (no pun intended) and a leg.

While useful in many scenarios, traditional RAG was not adequate in our case; it gave us only modest gains. Techniques like LoRA (Low-Rank Adaptation) were closer to what we needed, and we eventually invested in building our own proprietary adapter technique, FIRE (Fractal Injection Re-Encoder), which is now the key component of our overall architecture.

In essence, to build a solution we could trust to give sane, valid and accurate results, we had to be able to back it with an SLA (Service-Level Agreement). Short of that, anything we built would need the same disclaimer many AI assistants carry today, such as "use at your own risk" or "your mileage may vary". It turns out that once we built the right domain-specific "guardrail" tools and architecture (much of which we had to build anyway for our Maestro Studio ENSEMBLE product), achieving the reliability we needed, and backing it with an SLA, became possible.
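To make the idea of a "guardrail" a little more concrete, here is a minimal Python sketch of one simple kind of structural check that can run on a generated ARM template before anyone trusts it. It is not how FIRE or Maestro Studio AI work (their internals aren't described here). It only assumes the documented shape of an ARM template: the top-level `$schema`, `contentVersion` and `resources` properties are required, and each resource declares `type`, `apiVersion` and `name`. It also assumes the classic array form of `resources`. The `validate_arm_template` function and the `KNOWN_RESOURCE_TYPES` allowlist are hypothetical and exist only for illustration.

```python
import json

# Top-level properties every ARM deployment template must contain.
REQUIRED_TOP_LEVEL = ("$schema", "contentVersion", "resources")
# Properties every resource declaration must include.
REQUIRED_RESOURCE_KEYS = ("type", "apiVersion", "name")

# Hypothetical allowlist for illustration only; a real guardrail would
# derive it from the published Azure resource provider schemas.
KNOWN_RESOURCE_TYPES = {
    "Microsoft.Storage/storageAccounts",
    "Microsoft.Network/virtualNetworks",
    "Microsoft.Web/sites",
}


def validate_arm_template(text: str) -> list:
    """Return a list of problems; an empty list means every check passed."""
    try:
        template = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(template, dict):
        return ["template root must be a JSON object"]

    problems = [f"missing top-level property '{key}'"
                for key in REQUIRED_TOP_LEVEL if key not in template]

    resources = template.get("resources", [])
    if not isinstance(resources, list):
        # This sketch assumes the classic array form of 'resources'.
        problems.append("'resources' must be an array")
        resources = []

    for index, resource in enumerate(resources):
        label = f"resources[{index}]"
        if not isinstance(resource, dict):
            problems.append(f"{label} is not an object")
            continue
        problems.extend(f"{label} missing '{key}'"
                        for key in REQUIRED_RESOURCE_KEYS if key not in resource)
        rtype = resource.get("type")
        if rtype is not None and rtype not in KNOWN_RESOURCE_TYPES:
            # A type the allowlist doesn't recognize is a likely hallucination.
            problems.append(f"{label} has unknown type '{rtype}'")
    return problems


if __name__ == "__main__":
    generated = json.dumps({
        "$schema": "https://schema.management.azure.com/schemas/"
                   "2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [{
            "type": "Microsoft.Storage/magicAccounts",  # an invented type
            "apiVersion": "2023-01-01",
            "name": "demostorage",
        }],
    })
    print(validate_arm_template(generated))
    # prints: ["resources[0] has unknown type 'Microsoft.Storage/magicAccounts'"]
```

A check like this only catches structural mistakes and invented names; it says nothing about whether the template actually meets the user's intent, which is why it can only ever be one layer among several domain-specific guardrails.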

Maestro Studio AI

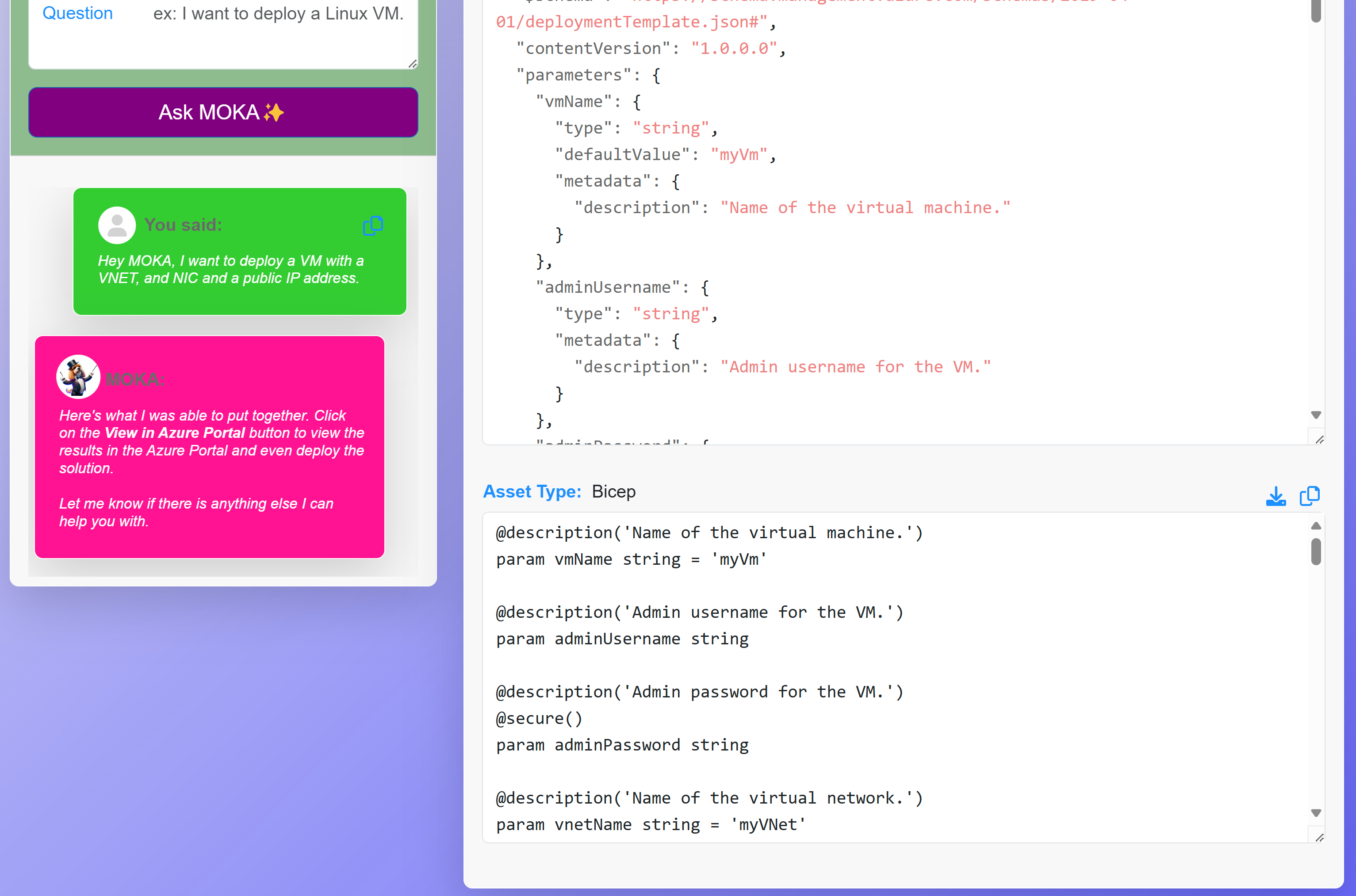

These 5 tips were instrumental in taking us from a high rate of hallucination in our AI system to an error rate of just a small percentage, low enough that we could back our solution with a decent SLA. This is how Maestro Studio AI, shown in the screenshot above, was born.

Learn more

We're always looking for feedback. If you have any feedback you'd like to share, please email us at support@stratuson.com or use our Contact Form.